Over the course of the last few months, as I was reading forums and talking to people on IRC, I couldn't help but notice the staggering amount of suggestions and fairly simple requests that we've been offered. While this would be by default a fantastic thing, it triggers a returning problem that most players are blissfully unaware of: the complexity of our architecture, and our dedication to keep the architecture as robust as possible. What this essentially means is that sometimes the most trivial minuscule feature has to go through a rather arduous chain of procedures to be finally implemented on the client side. My attempt in this post today is to present you with a case study of how this happens in case of something most people would consider a single line of code and 20 seconds of work. But first, let's talk about concepts.

The principle of our system

The idea of the Perpetuum infrastructure has been engineered to be robust from pretty much Day 1, although admittedly we kept expanding on several things over time. The concept was the following: Since we're a very very small group of developers, we cannot afford turnaround time and taking time away from each other when it comes to fixing things, so the optimal solution is to allow anyone to fix anything they can. Of course, this doesn't mean that people with no interest in coding can happily add mov ax, 1 into random places into our source tree, but it's important to realize that in a project like Perpetuum (which is a rather strongly data-driven game), we have several times more the data than we have actual code. This means that as long as the artists and content folks can change the data, programmers can work independently of them, so essentially what it boils down to is exposing as much data as we can. (If you started giggling, do a few push-ups. It helps.)

The way we do it is manifold: For artists, who mostly work with standard format data (bitmaps, meshes, sound files), we have a file server where they can upload new versions of resources, and they get downloaded the next time someone starts the game. Of course this can result in some problems when we change some internal file formats, but as long as the client is able to do an update, this shouldn't be an issue. (We also have revision control, so reverting to an earlier version is easy.) On the other side, we have the content guys who work on managing the actual game logic data (i.e. item data, balancing, that sort of stuff) - this isn't as trivial because this data needs to run through both the server and the client: the server needs this data for calculating the game logic, the client needs this for displaying data and doing client-side prediction. This also needs to be both persistent and easily alterable, so for this, we need a strictly database-driven environment.

So here's how it works.

A simple example

The most trivial example I already noted when we were working on it was related to sparks: The spark GUI was pretty much finished codewise, when Zoom noted that the order of the sparks doesn't make much sense because the syndicate ones are on the bottom while the ones that are harder to access are on the top. The reason for that, obviously, was that Alf simply added them in the order he felt like at the time, assuming that we can just sort it on the GUI-level later. He was obviously right about that, except we didn't really think of a method.

The idea looks simple on the surface, but there are several solutions to fix it and all of them are either laborious or asinine:

- We could leave them unsorted. That doesn't fly because it's annoying.

- We could sort them on a GUI level, by hardcoding weight values. This is just a big no-no considering that any change would need to go through the client coder. (=me)

- We could create a resource file in the resource repo that describes an order, and thus any non-programmer could edit it. Sounds good on paper, but sparks can be added or removed at any time, which means that if the resources are out of sync, the whole thing becomes a mess, and you're still working with two things at the same time. So no, this is just shooting ourselves in the foot. (Update - I might have forgotten to mention the most important part: non-programmers and markup languages rarely mix well.)

- We could sort them on the server, by hardcoding etc etc see above yeah no.

- We could extend the database records for sparks with a sort order value. Shiny clean solution, but with the most work to be done. Why? See below.

Implementation

So here's the entire process of how a single integer value traverses through our infrastructure. As you may see these aren't particularly complicated steps by themselves, but the amount of steps and their versatility eventually amounts up into a surprising amount of time.

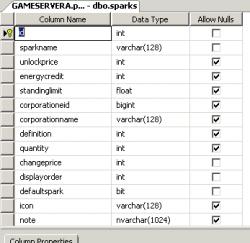

First, we take the database table for sparks, and add a field. This is done on the database server, usually by Crm.

Second, we extend the administrative web-based back-end (what we lovingly christened "the MCP") with the field so that we can have data to work with. This can get tricky if the field isn't just a standard number (but e.g. a new item type or an extension ID), but in this case this is just a simple text field. This is done by me.

Let's remind ourselves of something at this point: Because of our rather rigorous security-principles, no game-related data will be kept on client side, everything comes through the server. Now because sparks are very much a core gameplay element, everything related to them will also come through the server as well. So our next step is to add server code that loads the new value from the database, and hands it to the client. This is done by Crm again, and usually involves a server restart, much to the chagrin of whoever is working on the development server at the time.

So now our value is en route to the client, all is left for me is to add fields to the client cache and use it to actually sort the sparks in the correct order, i.e. the aforementioned "single line of code". Of course, the actual sorting algorithm code is already there, I just have to build a new comparison function, test it, deploy it as the newest client update, and ultimately the feature is done easily.

Simple enough, but if you look back, the ricochet performed by that one measly integer value is pretty staggering.

What you have to understand here is that this is the best solution, as strenuous as it seems:

- It's robust (no hardcoding involved, extending doesn't involve breaking anything previous)

- It's completely "user"-driven, anyone can use the web-interface to change the values without having to worry about breaking something in the code level (mostly); database-constraints and form-validation can take care of values we don't want in there.

- Most of the code-changes needed are just passing around values without thinking of what it really is.

- Once the code works, it'll always work as long as the data itself is solid.

But of course, as mentioned, the downside is that it needs four different levels of changes (database, back-end, server code, client code), which is only done by two people because of the involuntary competence we acquired over the years - in a "larger" project, this could possibly take a lot of cross-department management, while here it's usually just strong language and the occasional "Eh, I'll just do it myself". However, once we're done, we can wash our hands and never think of this pipeline again, because the content will go in on one end and the code will take care of it in the other. (On a tangential note, this applies to blogposts too: This post will probably be / was proofread and reviewed by several of us.)

Exercise for the reader

Now, to make sure that you understand the gravity of this stuff, here's a little thought-experiment for you: When you right-click an item and select "Information", there's a lot of parametric data that ends up on the screen, each with their specific measurement unit and number format. Now remind yourself that some of these values may look completely different internally: percentage values that show as 5% are obviously stored as 0.05, bonuses that show up as -25% may be stored as 0.75, some values need two decimal digits while others need five, and so on.

So here's the challenge: knowing the above principle (i.e. be data-driven), what kind of system do you build to make sure that each of those values end up being displayed correctly for the player? (No prize for the correct answer, although we do offer occasional trauma counselling.)

In a later post, I will go into a deeper insight about how an actual feature (case in point the whole spark-doohickey) goes from an idea to design to concept to task to implementation, down to the level of "BUG #4170 - please move that text one pixel to the left".